Intro:

There are some people who seem to have a mysterious ability to grasp complex technical topics easily. You know the people I’m talking about; the ones who quickly whip up custom SMT solvers in Rust on the weekends, the ones who casually say things like “concolic execution” and “Huffman tree” without batting an eye. The ones who manage to somehow find use after frees in seemingly unrelated parts of enormous codebases. In other words, the Smart People.

I, on the other hand, am not one of the Smart People. I wouldn’t know where to begin with writing an SMT solver. I’ve read about concolic execution before and I still could barely tell you a thing about it if my life depended on it. I’m more in the category of People Who Try to Make Up For Their Deficiencies Through Sheer Force of Will, which doesn’t have quite the same ring to it.

However, I recently became interested in exploiting JavaScript Just in Time (JIT) compilers in browsers, which is a topic that seemed relegated only to the Smart People. I spent a long time delaying digging into this topic because I assumed it would be too difficult to make any progress. As it turns out, it’s an interesting topic that doesn’t need to be quite so intimidating, and I wish I’d committed to learning about it earlier.

Goal:

My goal with this blog post is to provide a clear, gentle introduction to the role of JIT compilers in JavaScript engines, some types of security vulnerabilities that might arise from the use of JIT compilers, and tooling to use to aid in analyzing these bugs. Hopefully, this will help others consider that this topic isn’t as impossibly difficult as it might appear. This is not intended to be a comprehensive guide to JIT exploitation; this blog is just trying to make a complex topic more approachable and friendly so that others feel confident enough to dive deeper.

Throughout this post, I’ll be using D8, the standalone version of V8, the JS engine used in Chrome. Much of the content we’ll be covering is not specific to V8, but as we get into specific details, we’ll focus on V8. The example vulnerability we’ll be covering is also from a V8 CTF challenge.

Knowledge prerequisites:

You don’t need to already be familiar with JIT compilers or JavaScript engines; this post doesn’t assume knowledge of those topics. It’s helpful if you:

- Are familiar with traditional memory corruption issues such as out-of-bounds reads and writes and type confusions.

- Are at least passingly familiar with JavaScript.

- Are comfortable using a debugger such as GDB.

- Build V8 from source so you can follow along with the examples.

Helpful existing work:

Some good news is that there’s already quite a lot of existing public research on JIT vulnerabilities and exploitation. The following resources are just a few of the many helpful ones out there; they are not prerequisites for reading this blog post by any means, but if you find that you’re interested in this topic, you’re encouraged to read them:

https://saelo.github.io/presentations/blackhat_us_18_attacking_client_side_jit_compilers.pdf

This slide deck introduces JavaScript engine and JIT compiler concepts and shows example JIT vulnerabilities.

https://doar-e.github.io/blog/2019/01/28/introduction-to-turbofan/

This post provides V8-specific information by walking through TurboFan, a JIT compiler in V8. It also shows usage of Turbolizer, a useful tool for visualizing TurboFan’s behavior.

This presentation discusses common attacks on JavaScript engines and covers typical exploitation strategies.

https://www.madstacks.dev/posts/V8-Exploitation-Series-Part-4/

This post (which is part of a larger series that’s also worth your time!) discusses TurboFan and includes some tips on how to analyze it yourself.

https://docs.google.com/presentation/d/1DJcWByz11jLoQyNhmOvkZSrkgcVhllIlCHmal1tGzaw/edit#slide=id.p

This presentation begins with some background on TurboFan and then dives into specific bug and exploitation examples.

This post (which is the first in a three-part series) provides some information about TurboFan and its optimization phases.

Some of these resources will be referenced more than once in this blog post.

Intro to JS engines and JIT compilers:

Let’s begin with a simplified overview of JavaScript engines and JIT compilers before we dive into the more technical details. In a web browser, the JavaScript engine is the component responsible for executing the JavaScript code embedded in websites you visit. For example, let’s say you visit example.com and the website includes some JavaScript code; the JavaScript engine will run the code. JS engines are generally written in C or C++. Importantly, JS engines are frequently exploited components of browsers, since the engines are very complex and must run untrusted code by design.

A JIT compiler is a component of a JavaScript engine that’s responsible for making performance optimizations. To understand the role of a JIT compiler, let’s first consider how a JS engine operates without a JIT compiler. When a JS engine is provided with JS code to execute, the code will be parsed and converted to an abstract syntax tree, and then ultimately converted into bytecode which will be executed by the JS engine’s interpreter. We’ll call this the baseline interpreter. In V8, the baseline interpreter is called Ignition. At this stage, the JS bytecode can be considered unoptimized. It’s very possible for security vulnerabilities to occur during these stages, but we’re specifically interested in tackling JIT compilers in this post, so we won’t discuss baseline interpreter vulnerabilities here.

The baseline interpreter is actually sufficient to have a functional environment for executing JS; JIT compilers are just optional optimizing components, and aren’t strictly necessary. In fact, some JS engines that are not used in browsers may not feature JIT compilers at all. Modern browsers also allow users to disable JIT compilation if they wish. However, browser vendors are generally quite invested in making their browsers as performant as possible, and running JS via only a baseline interpreter is considered slow. Simply removing all JIT compilers would probably be better for security, but vendors understandably don’t want to make their products slower; like it or not, JIT compilers in browsers are probably not going to all be removed any time soon.

To improve performance, when JS is being run by a baseline interpreter, JIT compilers look for portions of JS code that are being run frequently. In JIT terminology, such portions are referred to as hot. Imagine JS code as machinery that gets hotter and hotter the more it’s used. Once a usage threshold is reached, JIT compilers will consider some JS (for example, a function) to be hot and will strive to create a more optimized version of this code by emitting a machine code (that is, assembly) version of the hot JS function, which will be much faster to execute than the bytecode version that is run by the baseline interpreter. In future usage of the code, the optimized JIT compiled version will be used instead of the bytecode version. However, this optimization process may introduce vulnerabilities, and we’ll take a look at examples in later sections of this post.
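To make the idea of hot code a little more concrete, here is a toy model in plain JavaScript. It is only a sketch: `makeTiered`, `HOT_THRESHOLD`, and the threshold value are made up for illustration, and real engines decide what is hot with far more nuance than a single counter.

```javascript
// Toy model only: the names and the threshold are invented for illustration.
const HOT_THRESHOLD = 1000; // not V8's real threshold

function makeTiered(slowVersion, fastVersion) {
  let calls = 0;
  let tier = "interpreter";

  function run(...args) {
    calls++;
    if (tier === "interpreter" && calls >= HOT_THRESHOLD) {
      // Pretend the JIT compiler just emitted machine code for this function.
      tier = "jit";
    }
    return tier === "jit" ? fastVersion(...args) : slowVersion(...args);
  }

  run.currentTier = () => tier;
  return run;
}

const add = makeTiered(
  (a, b) => a + b, // stands in for bytecode run by the interpreter
  (a, b) => a + b  // stands in for the optimized machine code
);

for (let i = 0; i < 999; i++) add(1, 2);
console.log(add.currentTier()); // interpreter
add(1, 2);                      // the 1000th call crosses the threshold
console.log(add.currentTier()); // jit
```

Both versions compute the same result; the only thing that changes is which one gets used once the function has been called often enough.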

At this point, you might be wondering: why don’t we just optimize everything? If the baseline interpreter is slow and JIT compiled code is fast, then why not always JIT compile all the code, all the time? What’s the point in using the interpreter, aside from avoiding the complexity of implementing a JIT compiler?

The answer is that JIT compilation is computationally expensive. Generating optimized code actually incurs a performance cost at first. This is why JIT compilers specifically look for hot code to optimize: because this code is getting used a lot, there’s a good chance that it’ll continue to be used after the JIT compiler has optimized it. Over time, the performance gains provided by the optimized code will outweigh the initial computational cost of generating the optimized code, leading to a net performance improvement.
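A quick back-of-the-envelope calculation shows why this trade-off only pays off for hot code. The function name and all of the numbers below are invented for illustration; they are not measurements from V8 or any other engine.

```javascript
// All numbers here are made up for illustration; they are not measurements.
function breakEvenCalls(compileCost, interpretedCostPerCall, jitCostPerCall) {
  const savedPerCall = interpretedCostPerCall - jitCostPerCall;
  if (savedPerCall <= 0) return Infinity; // optimizing would never pay off
  return Math.ceil(compileCost / savedPerCall);
}

// Say compiling costs 5000 time units, an interpreted call costs 10,
// and a JIT compiled call costs 2. Each optimized call then saves 8 units.
console.log(breakEvenCalls(5000, 10, 2)); // 625
```

With these made-up costs, the optimized code has to run 625 more times before the up-front compilation cost is recovered. Code that only runs a handful of times would never get there.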

To help make this clear, let’s consider an analogy:

Imagine you run a pizza restaurant. Every week, the same group of people show up at the same time and place exactly the same order. They always show up during rush hour, so you’re spread thin and their order takes a while to prepare. You notice that these people have been showing up consistently every week for a year and have always made the same order. In an effort to make rush hour less painful and get these folks their food more quickly, you decide to go ahead and make their food a bit in advance, so that when they show up, it’ll be ready for them and you’ll have more resources during rush hour. (This does mean their food would be sitting around for a while and you’d need to keep it warm, but don’t think too hard about all that.)

If this approach works, you’ll start getting some efficiency gains every week. There’s always the risk that the group just doesn’t show up one week, or that they show up but unexpectedly order something different, meaning your work and resources have been wasted. However, because the group has shown up so consistently for a whole year, you decide that the likely performance gains outweigh the possible cost of wasted food and time.

This is basically the role of a JIT compiler — watch for code that’s getting used frequently, take an initial performance hit to generate a more optimized version of the code, and then hope that the code continues to get used, leading to performance gains over time. To generate optimized code, the JIT compiler needs to make some assumptions about how the code will be used, and if the assumptions end up being wrong, the optimized code might not be usable, sort of like the case where the group in the analogy shows up but orders something unexpected. We’ll discuss this more in a bit.

Note that this is a dramatic simplification of JS engine architecture. One detail to consider is that modern browsers actually have more than one JIT compiler; they have multiple JIT compilers that perform different levels of optimization, with the goal of performing minor, less expensive optimizations for code that isn’t going to be used as frequently as the code that gets the more expensive but very efficient optimizations. For example, as of the time of this writing, V8 has its baseline interpreter Ignition, and then three different JIT compilers: Sparkplug, Maglev, and TurboFan. Each “tier” of compiler generates more optimized code than the previous one. The lower tiers are useful for optimizing code that’s used more than once, but not often enough to justify using the more expensive TurboFan tier.

In-depth review of these different JIT tiers is beyond the scope of this post. For the purposes of this discussion, we’ll just focus on the highest tier of JIT compiler (responsible for emitting the most optimized machine code), which in the case of V8 is TurboFan. Just keep in mind that these multiple distinct JIT compilers exist, so when hunting for vulnerabilities, you’ll want to consider more than just one JIT compiler.

Hands-on with JIT compilation

Okay, so now we’ve got a basic idea of the role of JIT compilers in browsers. Let’s get more specific now. What does this process look like? What types of vulnerabilities does it introduce?

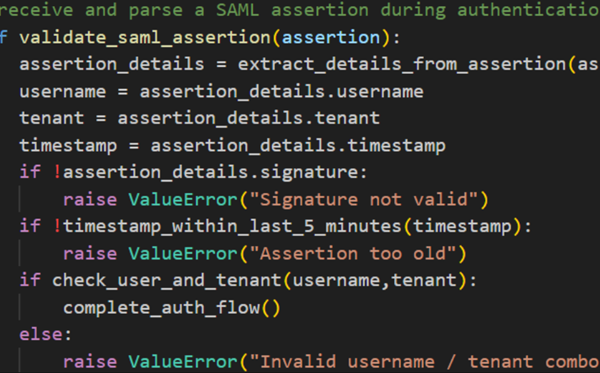

Compiling JS to machine code is difficult due to the lack of type information. JavaScript is dynamically typed, meaning that a variable can hold values of different types over its lifetime (for example, an integer first and a string later). When generating assembly, though, we need to be careful not to handle something as the wrong type, because this can lead to security problems (we’ll discuss this in further detail shortly).
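Here is a small illustration of the problem in plain JavaScript (the names are just for this example). The same variable can hold different types over time, and the same `+` operator does very different work depending on what it is handed:

```javascript
let value = 42;
console.log(typeof value); // number
value = "forty-two";
console.log(typeof value); // string

function add(a, b) {
  return a + b;
}

console.log(add(1, 2));     // 3  (numeric addition)
console.log(add("1", "2")); // 12 (string concatenation)
console.log(add(1, "2"));   // 12 (the number is converted to a string first)
```

Machine code for a numeric addition looks nothing like machine code for string concatenation, so a compiler has to know which case it is dealing with before it can emit anything fast.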

So if JS allows type changing, but accurate type information is needed to emit optimized machine code, how do we figure out the types? For example, if some parameters get passed to a function, how do we know what types those will be? They could be anything. JIT compilers solve this by speculating what the types are likely to be. They can do this by observing what the types have been in the past (a process called profiling) and speculating that they are likely to be the same in the future. The JIT compiler will then emit optimized machine code based on those speculations.
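As a rough sketch of what profiling means, the following toy wrapper records the argument types a function has been called with and only speculates when they have always been the same. `profile` and `speculate` are hypothetical names for this example; V8’s real type feedback is stored very differently.

```javascript
// Toy type profiler with hypothetical names; not V8's feedback format.
function profile(fn) {
  const seen = new Set();

  function wrapped(...args) {
    // Record the combination of argument types used for this call.
    seen.add(args.map((a) => typeof a).join(","));
    return fn(...args);
  }

  // Speculate only if every call so far used the same argument types.
  wrapped.speculate = () => (seen.size === 1 ? [...seen][0] : "unknown");
  return wrapped;
}

const profiledAdd = profile((a, b) => a + b);

for (let i = 0; i < 100; i++) profiledAdd(i, 1);
console.log(profiledAdd.speculate()); // number,number

profiledAdd("1", "2");
console.log(profiledAdd.speculate()); // unknown
```

After a hundred calls with numbers, betting on numbers is reasonable. As soon as a different type shows up, that bet is no longer safe.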

Let’s examine this process a bit. Examples throughout this post will use D8, the standalone version of V8. You are encouraged to compile D8 yourself so that you can follow along. To do so, you can follow the official documentation here: https://v8.dev/docs/build

Additionally, I will be using GDB with the GEF (https://github.com/hugsy/gef) extension for debugging.

To begin, let’s run D8 in GDB with two special arguments, --allow-natives-syntax and --trace-turbo.

--allow-natives-syntax enables some useful debugging functions that are not normally available.

--trace-turbo will be useful later for examining the JIT compilation process.

```
gdb --args ./d8 --allow-natives-syntax --trace-turbo
```

Upon running D8, you’ll observe that you’re simply given an interactive shell. This is because we’re only interacting with the JavaScript engine rather than a full browser. This simplifies things a lot by allowing us to just investigate the components we’re interested in. After running D8, let’s begin by creating a simple addition function that takes two arguments:

```
d8> function simple_add(arg1,arg2){return arg1 + arg2}
undefined
```

Now we’ll use one of the functions made available by --allow-natives-syntax, which is %DebugPrint. This function will show a variety of information about the function:

```
d8> %DebugPrint(simple_add);
DebugPrint: 0x32df00199589: [Function]
 - map: 0x32df00182215 <Map[32](HOLEY_ELEMENTS)> [FastProperties]
 - prototype: 0x32df0018213d <JSFunction (sfi = 0x32df001418a1)>
 - elements: 0x32df00000745 <FixedArray[0]> [HOLEY_ELEMENTS]
 - function prototype:
 - initial_map:
 - shared_info: 0x32df00199501 <SharedFunctionInfo simple_add>
 - name: 0x32df00199459 <String[10]: #simple_add>
 - builtin: CompileLazy
 - formal_parameter_count: 3
 - kind: NormalFunction
 - context: 0x32df00181a85 <NativeContext[301]>
 - code: 0x32df000346a9 <Code BUILTIN CompileLazy>
 - dispatch_handle: 0x264800
 - source code: (arg1,arg2){return arg1 + arg2}
 - properties: 0x32df00000745 <FixedArray[0]>
 - All own properties (excluding elements): {
    0x32df00000d91: [String] in ReadOnlySpace: #length: 0x32df000262f9 <AccessorInfo name= 0x32df00000d91 <String[6]: #length>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [__C]), location: descriptor
    0x32df00000dbd: [String] in ReadOnlySpace: #name: 0x32df000262e1 <AccessorInfo name= 0x32df00000dbd <String[4]: #name>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [__C]), location: descriptor
    0x32df000042d9: [String] in ReadOnlySpace: #arguments: 0x32df000262b1 <AccessorInfo name= 0x32df000042d9 <String[9]: #arguments>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [___]), location: descriptor
    0x32df00004559: [String] in ReadOnlySpace: #caller: 0x32df000262c9 <AccessorInfo name= 0x32df00004559 <String[6]: #caller>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [___]), location: descriptor
    0x32df00000da5: [String] in ReadOnlySpace: #prototype: 0x32df00026311 <AccessorInfo name= 0x32df00000da5 <String[9]: #prototype>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [W__]), location: descriptor
 }
 - feedback vector: feedback metadata is not available in SFI
0x32df00182215: [Map]
 - map: 0x32df00181a35 <MetaMap (0x32df00181a85 <NativeContext[301]>)>
 - type: JS_FUNCTION_TYPE
 - instance size: 32
 - inobject properties: 0
 - unused property fields: 0
 - elements kind: HOLEY_ELEMENTS
 - enum length: invalid
 - stable_map
 - callable
 - constructor
 - has_prototype_slot
 - back pointer: 0x32df00000011 <undefined>
 - prototype_validity cell: 0x32df00000a81 <Cell value= 1>
 - instance descriptors (own) #5: 0x32df0018223d <DescriptorArray[5]>
 - prototype: 0x32df0018213d <JSFunction (sfi = 0x32df001418a1)>
 - constructor: 0x32df001821e1 <JSFunction Function (sfi = 0x32df0002b721)>
 - dependent code: 0x32df00000755 <Other heap object (WEAK_ARRAY_LIST_TYPE)>
 - construction counter: 0
```

There’s a lot of information there and you don’t need to dig into most of it. However, you may want to note the line with the code pointer:

```
 - code: 0x32df000346a9 <Code BUILTIN CompileLazy>
```

For now it doesn’t mean much, but let’s cause our function to be JIT compiled and compare the debug output for the JITed version with the original bytecode version. Remember how the JIT compiler looks for code it considers hot, AKA code that’s used frequently? Let’s just run our function a ton of times in a loop to make it hot enough for the JIT compiler to optimize it (there’s also a way to do this with some of the functions enabled by --allow-natives-syntax, and we’ll see that in a moment):

```
d8> for (i = 0; i < 0x20000; i++){simple_add(1,2)}
```

Great! If you’re following along on your own machine, you may have noticed some output showing what the JIT compiler is doing; this is due to the --trace-turbo argument we provided to d8 earlier.

Now that it’s been identified as hot and JITed, let’s take a look at the function again using %DebugPrint:

```
d8> %DebugPrint(simple_add)
DebugPrint: 0x32df00199589: [Function]
 - map: 0x32df00182215 <Map[32](HOLEY_ELEMENTS)> [FastProperties]
 - prototype: 0x32df0018213d <JSFunction (sfi = 0x32df001418a1)>
 - elements: 0x32df00000745 <FixedArray[0]> [HOLEY_ELEMENTS]
 - function prototype:
 - initial_map:
 - shared_info: 0x32df00199501 <SharedFunctionInfo simple_add>
 - name: 0x32df00199459 <String[10]: #simple_add>
 - formal_parameter_count: 3
 - kind: NormalFunction
 - context: 0x32df00181a85 <NativeContext[301]>
 - code: 0x23f700040ca1 <Code TURBOFAN_JS>
 - dispatch_handle: 0x264800
 - source code: (arg1,arg2){return arg1 + arg2}
 - properties: 0x32df00000745 <FixedArray[0]>
 - All own properties (excluding elements): {
    0x32df00000d91: [String] in ReadOnlySpace: #length: 0x32df000262f9 <AccessorInfo name= 0x32df00000d91 <String[6]: #length>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [__C]), location: descriptor
    0x32df00000dbd: [String] in ReadOnlySpace: #name: 0x32df000262e1 <AccessorInfo name= 0x32df00000dbd <String[4]: #name>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [__C]), location: descriptor
    0x32df000042d9: [String] in ReadOnlySpace: #arguments: 0x32df000262b1 <AccessorInfo name= 0x32df000042d9 <String[9]: #arguments>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [___]), location: descriptor
    0x32df00004559: [String] in ReadOnlySpace: #caller: 0x32df000262c9 <AccessorInfo name= 0x32df00004559 <String[6]: #caller>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [___]), location: descriptor
    0x32df00000da5: [String] in ReadOnlySpace: #prototype: 0x32df00026311 <AccessorInfo name= 0x32df00000da5 <String[9]: #prototype>, data= 0x32df00000011 <undefined>> (const accessor descriptor, attrs: [W__]), location: descriptor
 }
 - feedback vector: 0x32df0019a4b9: [FeedbackVector]
 - map: 0x32df00000801 <Map(FEEDBACK_VECTOR_TYPE)>
 - length: 1
 - shared function info: 0x32df00199501 <SharedFunctionInfo simple_add>
 - tiering_in_progress: 0
 - osr_tiering_in_progress: 0
 - invocation count: 402
 - closure feedback cell array: 0x32df0000216d: [ClosureFeedbackCellArray] in ReadOnlySpace
 - map: 0x32df000007d9 <Map(CLOSURE_FEEDBACK_CELL_ARRAY_TYPE)>
 - length: 0
 - elements:
 - slot #0 BinaryOp BinaryOp:SignedSmall {
     [0]: 1
  }
0x32df00182215: [Map]
 - map: 0x32df00181a35 <MetaMap (0x32df00181a85 <NativeContext[301]>)>
 - type: JS_FUNCTION_TYPE
 - instance size: 32
 - inobject properties: 0
 - unused property fields: 0
 - elements kind: HOLEY_ELEMENTS
 - enum length: invalid
 - stable_map
 - callable
 - constructor
 - has_prototype_slot
 - back pointer: 0x32df00000011 <undefined>
 - prototype_validity cell: 0x32df00000a81 <Cell value= 1>
 - instance descriptors (own) #5: 0x32df0018223d <DescriptorArray[5]>
 - prototype: 0x32df0018213d <JSFunction (sfi = 0x32df001418a1)>
 - constructor: 0x32df001821e1 <JSFunction Function (sfi = 0x32df0002b721)>
 - dependent code: 0x32df00000755 <Other heap object (WEAK_ARRAY_LIST_TYPE)>
 - construction counter: 0
```

Did you spot some differences in the output? Here, take a look at the code pointer for the JITed function:

```
 - code: 0x23f700040ca1 <Code TURBOFAN_JS>
```

Note that we’re pointing to a different place in memory and there’s now a reference to TurboFan. Additionally, if you take a look at the contents of the directory from which you ran D8, you’ll see that there’s now a JSON file for the function we just ran! In my case, it’s called turbo-simple_add-1.json.

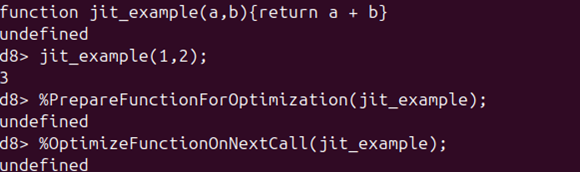

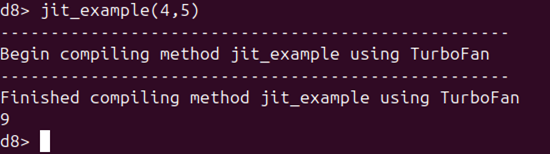

In a real exploit, a loop like the one above is how we’d force some code to be JITed, but if you don’t want to have to write a loop every time you want some code identified as hot and therefore JITed, you can also use some functions enabled by the --allow-natives-syntax argument. Specifically, after defining a function, you can use the %PrepareFunctionForOptimization() and %OptimizeFunctionOnNextCall() functions. This will allow the function to be JITed the very next time it is called. The following screenshots illustrate this process:
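In script form, that sequence might look something like the sketch below. The two `%` functions are the ones named above; `forceJit` is a hypothetical helper written for this example. It builds the `%` calls with `new Function` so that the file still parses when --allow-natives-syntax is not enabled, in which case it falls back to the warm-up loop we used earlier.

```javascript
function simple_add(arg1, arg2) {
  return arg1 + arg2;
}

// Hypothetical helper: request optimization through the natives syntax when it
// is available, otherwise fall back to making the function hot with a loop.
function forceJit(fn, warmupArgs) {
  try {
    // These bodies only parse when the engine runs with --allow-natives-syntax.
    const prepare = new Function("f", "%PrepareFunctionForOptimization(f);");
    const optimize = new Function("f", "%OptimizeFunctionOnNextCall(f);");
    prepare(fn);
    fn(...warmupArgs); // run once so type feedback is collected
    optimize(fn);
    fn(...warmupArgs); // this call should use the optimized code
    return "natives";
  } catch (e) {
    if (!(e instanceof SyntaxError)) throw e;
    for (let i = 0; i < 0x20000; i++) fn(...warmupArgs);
    return "loop";
  }
}

const how = forceJit(simple_add, [1, 2]);
console.log("optimization requested via:", how);
console.log(simple_add(1, 2)); // 3
```

When typing directly into a d8 shell started with --allow-natives-syntax, the wrapper is unnecessary: you can call %PrepareFunctionForOptimization(simple_add), call simple_add(1,2) once, call %OptimizeFunctionOnNextCall(simple_add), and then call simple_add(1,2) again.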

So we’ve JITed a function. What now? We probably want to see what happens under the hood when this operation takes place, since that’ll be key to eventually understanding the types of vulnerabilities that can be introduced here. To do this, let’s check out a tool called Turbolizer.

Turbolizer

To analyze what TurboFan is doing during the JIT compilation process, we’ll use Turbolizer, a tool that displays TurboFan’s various stages in graph form. Turbolizer can be combined with the --trace-turbo CLI argument for D8. This argument emits a JSON file that can be examined using Turbolizer to visualize TurboFan’s optimization stages. We’ve already generated a JSON file in the previous section for our simple_add() function.

After grabbing the V8 source, you can build and run Turbolizer. The documentation here explains the process:

https://chromium.googlesource.com/v8/v8/+/refs/heads/main/tools/turbolizer/

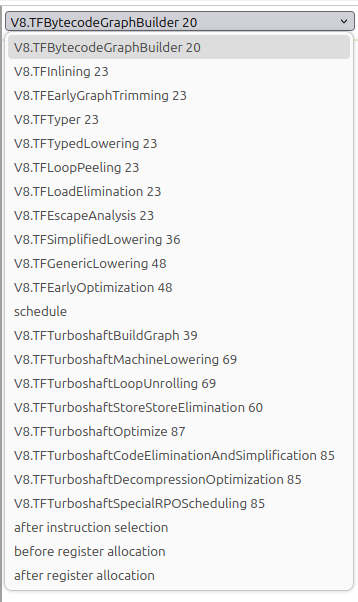

Once we’ve got Turbolizer set up and listening, we can upload the JSON file for our function. Upon doing so, we’ll be presented with some information about our function, including a drop-down menu that shows a bunch of different graph view options. What are these?

As part of its optimization process, TurboFan converts the code it intends to optimize into an intermediate representation (IR). The IR in this case is a graph using an idea from compiler theory called “Sea of Nodes”; you can read more about that theory here: https://darksi.de/d.sea-of-nodes/

However, I don’t know about you, but compiler theory is getting dangerously close to Smart People territory for my tastes. Rather than try to understand all of that first, let’s focus on just getting enough to make some sense of what we’re seeing in Turbolizer, and add on to our knowledge as we do hands-on work. In very simplified terms, TurboFan will convert the unoptimized code into “nodes”, which are part of the IR. The IR is then easier to work with for performing optimization. Turbolizer lets us view the generated IR, and the various steps performed during optimization, as a graph.

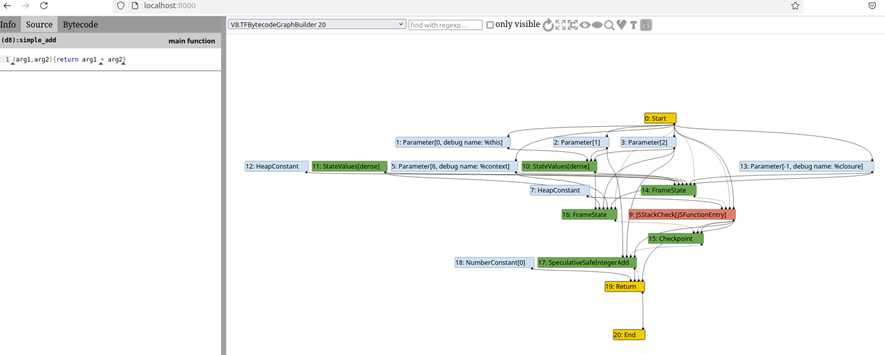

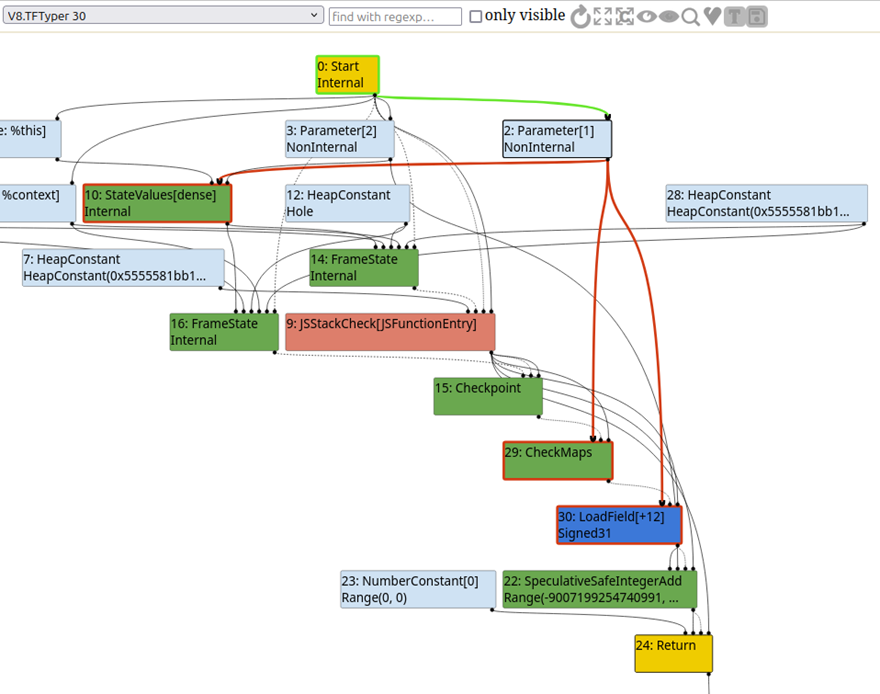

What does this actually look like? Here’s a screenshot of the V8.TFBytecodeGraphBuilder 20 graph, which is the first graph available, after I’ve clicked on the arrows on the left-hand side in the simple_add JS source (or you can use the “show all nodes” button):

There’s a lot going on here, but chances are that we don’t need to understand every single part of this graph, at least not right away. Of note is that Parameter[1] and Parameter[2] sound like they’re probably the arguments getting passed to the function, and that node labeled SpeculativeSafeIntegerAdd sounds pretty interesting.

Currently, our view is set to V8.TFBytecodeGraphBuilder, which is the first of many possible graph views. These views show phases performed during JIT compilation. These phases relate to the distinct rounds of optimization applied by TurboFan. By examining the different graph views, we can see what TurboFan did during a specific optimization phase. This is important, as you may want to trigger a bug during a specific phase (see https://www.jaybosamiya.com/blog/2019/01/02/krautflare/, https://abiondo.me/2019/01/02/exploiting-math-expm1-v8/ and the associated bug https://project-zero.issues.chromium.org/issues/42450781 for an example). The following post contains a bit more information about some of the TurboFan optimization phases: https://www.zerodayinitiative.com/blog/2021/12/6/two-birds-with-one-stone-an-introduction-to-v8-and-jit-exploitation

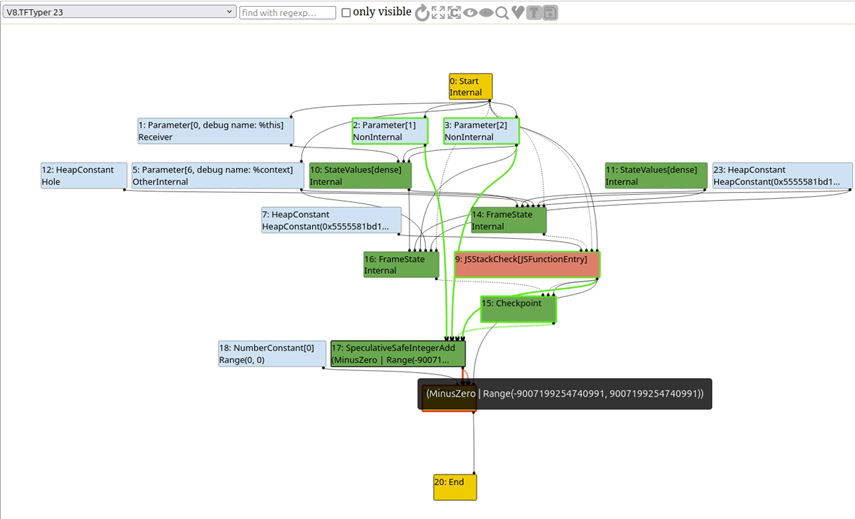

Discussing every TurboFan phase available in Turbolizer would be far more comprehensive than this blog post aims to be (and I’m still learning about them myself and don’t want to provide inaccurate information), but there are a couple of phases worth calling out. The graph builder phase is not actually an optimization phase; it’s more of a setup phase. The typer phase is of particular interest: it is responsible for determining the types that the optimized function is expected to use and return. If we take a look at the typer phase in Turbolizer and enable the “toggle types” option, we can see that the typer has determined that the output of the function should be the return value of a SpeculativeSafeIntegerAdd within a specific range (the max sizes for a small integer in V8).

As you dig into these optimization phases, you should examine the source code implementing them. The v8/src/compiler/turbofan-typer.cc and v8/src/compiler/operation-typer.cc files are related to the TurboFan typer phase (as of the time of this writing; the file names or locations could change in the future). For example, if you check out operation-typer.cc, you can find the implementation of SpeculativeSafeIntegerAdd:

```cpp
Type OperationTyper::SpeculativeSafeIntegerAdd(Type lhs, Type rhs) {
  Type result = SpeculativeNumberAdd(lhs, rhs);
  // If we have a Smi or Int32 feedback, the representation selection will
  // either truncate or it will check the inputs (i.e., deopt if not int32).
  // In either case the result will be in the safe integer range, so we
  // can bake in the type here. This needs to be in sync with
  // SimplifiedLowering::VisitSpeculativeAdditiveOp.
  return Type::Intersect(result, cache_->kSafeIntegerOrMinusZero, zone());
}
```
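The comment in that C++ snippet mentions the “safe integer range”. Assuming this corresponds to JavaScript’s own notion of a safe integer plus the special value -0 (an inference from the kSafeIntegerOrMinusZero name, not something verified against the V8 source here), the following plain JavaScript shows what that range is and why it matters:

```javascript
// The largest integer that a double can represent without losing precision.
console.log(Number.MAX_SAFE_INTEGER);                 // 9007199254740991
console.log(Number.MAX_SAFE_INTEGER === 2 ** 53 - 1); // true

// Beyond the safe range, distinct integers collapse into the same double.
console.log(2 ** 53 === 2 ** 53 + 1);                 // true
console.log(Number.isSafeInteger(2 ** 53));           // false

// -0 is a distinct value from 0, which is why it gets its own mention.
console.log(Object.is(0, -0));                        // false
```

Range information like this is what lets a compiler reason about which values a node can possibly produce.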

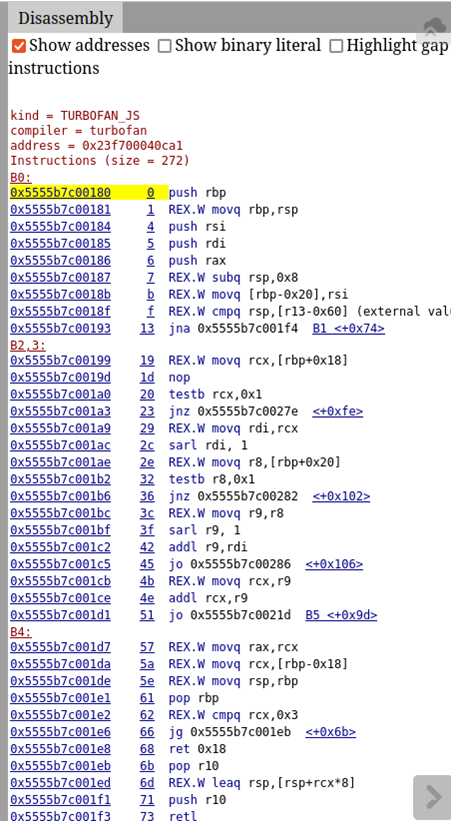

Helpfully, Turbolizer also has a right-hand panel that shows the assembly code emitted by TurboFan, and includes the ability to display the addresses at which the code is located:

These addresses can be used to examine the assembly code in memory in the d8 process we’re debugging. Let’s hop back over to GDB and give that a try. In GDB, we can first set a break point on the first instruction address provided by Turbolizer:

```
break *0x5555b7c00180
```

Now that we’ve JITed our function, we can invoke it again and should hit our breakpoint that’s set at the beginning of the optimized code emitted by TurboFan:

```
d8> simple_add(1,2);

Thread 1 "d8" hit Breakpoint 1, 0x00005555b7c00180 in ?? ()
```

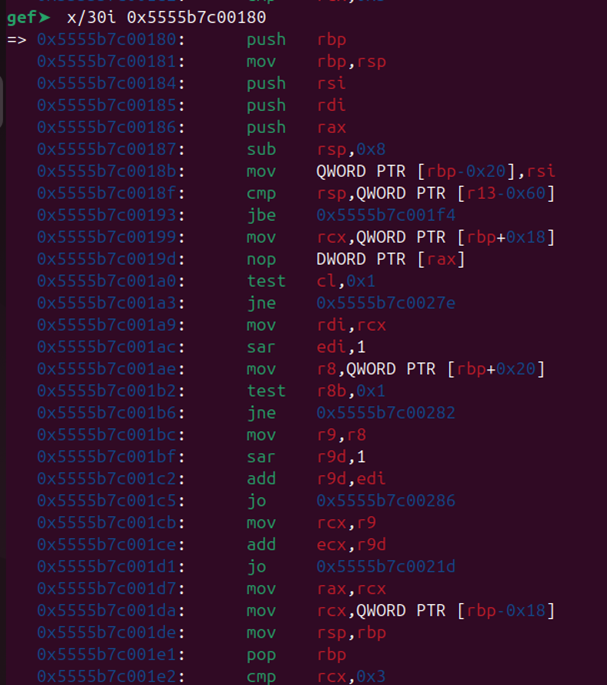

Great! We can now view some of the instructions beginning at this address; let’s check out the next 30 with the following command:

```
x/30i 0x5555b7c00180
```

It’s now possible to single-step through the JITed code to get a feel for it. However, we just called our function exactly as expected, with integers as arguments. What happens if we call our function with some other argument type? If the code was optimized based on the speculation that the arguments would be integers, then surely a different argument type would be bound to cause problems, right? Let’s take a look at what happens by running our function again, but providing strings as arguments this time:

```
d8> simple_add("1","2");

Thread 1 "d8" hit Breakpoint 1, 0x00005555b7c00180 in ?? ()
```

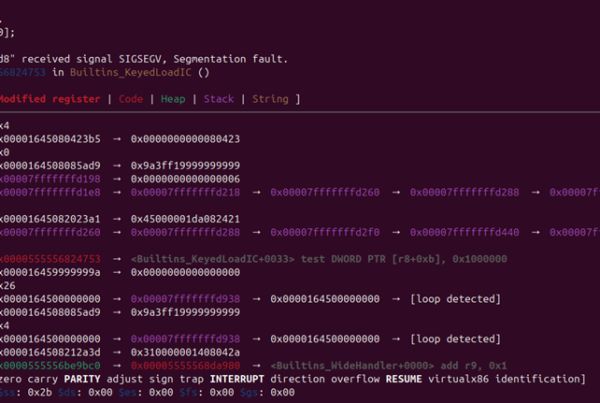

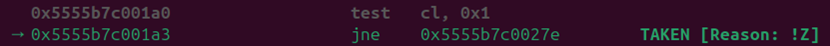

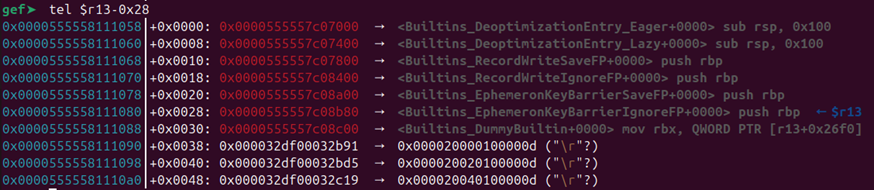

Note that there’s an interesting check being applied by the JITed code in which the cl register is tested against 0x1, and then there’s a conditional jump:

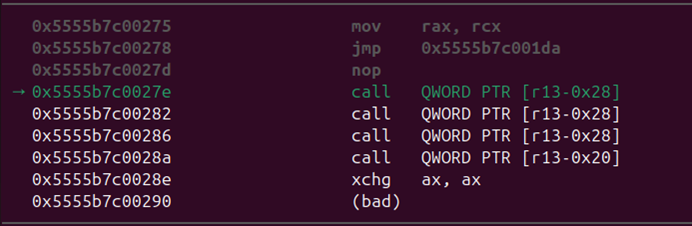

This check’s jump was not taken when we provided integers as arguments, but after providing strings, it is. Let’s single-step and see where this conditional jump takes us:

Looks like we’ve got a call that dereferences something relative to the r13 register. Okay, so let’s take a look at what resides at that pointer:

Hm, so we’re going to call something called Builtins_DeoptimizationEntry_Eager. Isn’t the whole point of JIT compilation optimization? So what’s with this deoptimization thing? That sounds like it’s the opposite of what we’d expect. We’ll talk about this more in the next section. We’ll also return to Turbolizer afterward to make sense of this.

So now we know a little bit about the optimization process performed by JIT compilers and have seen some of the phases used by TurboFan. We’ve also seen some checks to ensure that the arguments provided to our function really are the expected type. This is important, and failure to ensure the correct types are used can lead to vulnerabilities. Now that we’ve finally gotten a basic understanding of JIT compilers, let’s start talking about some vulnerabilities that can occur in them.

Common JIT compiler vulnerabilities

In the previous sections, we learned that JIT compilers determine the types of variables/arguments used in code to be optimized by profiling to see what types have been used in the past, then speculating about which ones are likely to be used in the future.

But what if the speculations made don’t hold true? For example, what if the type of a parameter provided to a function is different than the one the JIT compiler has speculated it will be? If there’s no system in place to deal with this possibility, then the JITed code could end up treating a parameter as an incorrect type — an object could be treated as a different kind of object. This is a class of bug referred to as a type confusion; it’s not specific to JIT compilers, but does commonly occur in them.

To help prevent this situation, the JIT compiler introduces “speculation guards” into its emitted machine code. The purpose of these guards is to verify that the speculations hold true. If they do, then it’s safe to proceed to execute the machine code emitted by the JIT compiler. However, if the check performed by the speculation guards reveals that the speculations are inaccurate, then a deoptimization bailout is triggered; this just means that the fast machine code is not suitable / safe to use in this case, so execution will revert to the slower bytecode instead. It’s slow, but it’s safe. (TurboFan knows how to return to the original bytecode version of the function by preparing some deoptimization data during its code generation; this blog post contains some information about this process: https://www.l3harris.com/newsroom/editorial/2023/10/permalink-modern-attacks-chrome-browser-optimizations-and)
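Conceptually, a speculation guard and its bailout behave something like the following sketch. This is only a model written in JavaScript: real guards are checks in the emitted machine code, and `optimizedAdd`, `genericAdd`, and `deoptCount` are hypothetical names for this example.

```javascript
// Conceptual model only; real guards live in the emitted machine code.
let deoptCount = 0;

// Stands in for the bytecode version: slower, but handles any types.
function genericAdd(a, b) {
  return a + b;
}

// Stands in for the JITed version, compiled on the bet that both
// arguments are integers.
function optimizedAdd(a, b) {
  // Speculation guard: verify the bet before running the fast path.
  if (!Number.isInteger(a) || !Number.isInteger(b)) {
    deoptCount++;            // deoptimization bailout
    return genericAdd(a, b); // slow, but safe
  }
  return a + b; // fast path: stands in for a plain integer add instruction
}

console.log(optimizedAdd(1, 2));     // 3
console.log(optimizedAdd("1", "2")); // 12 (a string, produced by the slow path)
console.log(deoptCount);             // 1
```

The important property is that the caller gets the correct result either way. The bug classes discussed here arise when a guard like this is missing or checks the wrong thing, so the fast path runs on a value it was never meant to handle.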

So now the JITed code we stepped through in the last section is starting to make more sense. When we provided integers as arguments, as we did when we made our function hot, the optimized code behaved as expected, because the profiling suggested that integers would always be used. When we switched to using strings, though, the JITed code was no longer suitable. We encountered a speculation guard that checked the argument type. When it found that the type was not an integer, a deoptimization bailout was triggered, leading us to run the slower bytecode instead.

Let’s take a look at this in Turbolizer, but with something a little more interesting than a function that just adds two integers. What if we add a property of an object and an integer instead? Let’s create that function and JIT it:

    d8> test_object = {x:1}
    {x: 1}
    d8> function object_jit(arg1,arg2){return arg1.x + arg2}
    undefined
    d8> for (i = 0; i < 0x200000; i++){object_jit(test_object,2)};

After JIT compiling the function, we can upload the emitted JSON file to Turbolizer. Let’s take a look at the typer phase with all nodes displayed and the type information displayed:

Notice how Parameter[1], which is the first argument passed to our function, has a CheckMaps node related to it? This is a check to ensure that the object provided in this argument is of the expected type. In V8, a map is basically some metadata about an object (this concept exists in other JS engines too, it just goes by different names; in SpiderMonkey, Firefox’s JS engine, this is called a shape). A map will be shared by different objects if they have the same number of properties and the same property names, added in the same order, even if the values of the properties are different. This is a very brief overview of this topic, and I strongly recommend you check out this excellent blog post that examines this topic in much greater detail: https://mathiasbynens.be/notes/shapes-ics

For our purposes, all we really need to understand right now is that the map of an object pretty much represents its type, and this check is there to ensure that the type has not changed. If the map of the object we provide in argument 1 of object_jit() is in any way different than the one for test_object, a deoptimization bailout will be triggered. For example, the following code leads to a bailout, because even though the object we provide does have a property called x, it doesn’t have the same map, since it doesn’t have a matching number of properties:

    d8> newobj = {x:1,y:2}
    {x: 1, y: 2}
    d8> object_jit(newobj,2)

You’re encouraged to actually try setting a breakpoint on the JITed code and observing this speculation guard and bailout yourself.
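If it helps to see the map check as code, here is a minimal sketch. It assumes a map can be approximated by an object's ordered list of property names, which is a simplification (real maps track more than that). `mapOf` and `compileReadX` are hypothetical helpers I made up for this illustration, not V8 APIs.

```javascript
// Toy model of a map check. mapOf() is a hypothetical helper, not a V8 API.

// Approximate an object's map by its ordered list of property names.
function mapOf(obj) {
  return Object.keys(obj).join(",");
}

// "Compile" a property read that is specialized for one map.
function compileReadX(expectedMap) {
  return function optimizedReadX(obj) {
    if (mapOf(obj) !== expectedMap) {
      return "bailout";   // map check failed: real code would deoptimize here
    }
    return obj.x;         // fast path: the object's layout is known
  };
}

const test_object = { x: 1 };
const readX = compileReadX(mapOf(test_object));

console.log(readX({ x: 42 }));        // 42: same map, different value
console.log(readX({ x: 1, y: 2 }));   // bailout: extra property, different map
```

Note that the second object still has an `x` property. The check isn't asking "can this read succeed?", it's asking "is this exactly the layout I was compiled for?"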

Okay, so we now understand that JIT compilers try to avoid issues like type confusions by using speculation guards. But obviously, vulnerabilities do still occur, so what causes them? A variety of bugs are possible, but we’re going to focus on two that are outlined by Samuel Groß, AKA saelo, in his excellent “Attacking Client-Side JIT Compilers (v2)” presentation from BlackHat 2018 (https://saelo.github.io/presentations/blackhat_us_18_attacking_client_side_jit_compilers.pdf). You are very much encouraged to read this (and if you’re interested in browser security, you should probably follow saelo’s work in general). I’m just providing a brief overview of some concepts he covers in greater detail.

In particular, in his presentation, saelo mentions two major vulnerability classes that are caused by optimizations applied by the JIT compiler: bounds check elimination and redundancy elimination.

Redundancy elimination relates to removing speculation guards that perform checks that have already been performed earlier — in other words, removing redundant speculation guards. If a check has already been performed once and it’s not possible for anything relevant to change between that original check and the redundant one, then the redundant check is just impacting performance without adding any security benefit. However, you may have noticed that the statement “and it’s not possible for anything relevant to change between that original check and the redundant one” is doing a lot of work in that sentence.

A JIT compiler tries to discern whether a check is redundant through a process called side effect modeling. For example, it might try to decide whether a type-checking speculation guard is redundant by using side effect modeling to see if there’s any way that an object’s type could be changed during execution of the code between the initial speculation guard and the potentially redundant one. If the side effect modeling determines that the object type cannot be changed, then the redundant speculation guard can be safely removed.

Unless, of course, the side effect modeling is simply incorrect! By finding a case the JIT compiler didn’t consider, an attacker could unexpectedly change the object’s type to cause a type confusion. Because the speculation guard has been removed, there’s no longer any protection in place to detect this and bail out. As a result, this will likely lead to memory corruption, which may be exploitable. If you’re interested in seeing an example of a real-world (and very complex, at least for me) improper side effect modeling vulnerability, you can check out the following blog post: https://github.blog/security/vulnerability-research/getting-rce-in-chrome-with-incorrect-side-effect-in-the-jit-compiler/
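To see why "nothing relevant can change in between" is such a strong claim, consider this small example. It's ordinary JavaScript that runs correctly in any engine (it is not an exploit); `readTwice` is just a name I picked. It only shows the kind of side effect the compiler has to rule out before dropping the second check.

```javascript
// Illustration only: shows why a check after a call may NOT be redundant.
function readTwice(arr, callback) {
  const first = arr[0];    // an engine would check arr's type before this read
  callback();              // arbitrary user code: can it change arr?
  const second = arr[0];   // only safe to skip the re-check if the answer is "no"
  return [first, second];
}

const floats = [1.1, 2.2, 3.3];

// The callback's side effect turns an array of doubles into an array holding an object.
const result = readTwice(floats, () => { floats[0] = { x: 1 }; });

console.log(typeof result[0]);   // number
console.log(typeof result[1]);   // object
```

If a compiler wrongly decided that `callback()` could not affect `arr`, the second read would be compiled as a raw double load, and it would be reading an object pointer instead.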

Bounds check elimination bugs are similar in that they also stem from inaccurate reasoning by the JIT compiler. As an example, if the function to be JITed accesses an index of an array, the JITed code needs to make sure that the index is within the bounds of the array. This could be accomplished by including a speculation guard to ensure the index really is within bounds. However, if the compiler thinks it’s able to prove that the index value will always be within bounds, then this speculation guard is impacting performance unnecessarily, so it can be eliminated. Just like with redundancy elimination, this means the compiler needs to be accurately calculating whether the index can ever be a value outside the array bounds. A miscalculation here could happen due to an integer overflow like the one demonstrated in this Exodus Intelligence blog post about a vulnerability in Safari: https://blog.exodusintel.com/2023/07/20/shifting-boundaries-exploiting-an-integer-overflow-in-apple-safari/#Vulnerability (Note that this technique is old and in V8, hardening has since been applied; I think this scenario helps illustrate the general idea, so I’ve kept it, but if you’re interested in the hardening that’s been applied, you can read about it here: https://doar-e.github.io/blog/2019/05/09/circumventing-chromes-hardening-of-typer-bugs/)
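Here's a rough sketch of how a range calculation can go wrong. This is a toy I wrote to illustrate the idea, not code from any real compiler: `naiveRangeAfterAdd` is a hypothetical, deliberately buggy "range analysis" that forgets 32-bit integer addition can wrap around.

```javascript
// Toy range analysis with a deliberate bug. All names are hypothetical.

// What the generated code really does: 32-bit integer addition that wraps around.
function int32Add(a, b) {
  return (a + b) | 0;
}

// What the buggy "compiler" believes: ranges are added with no overflow.
function naiveRangeAfterAdd(range, k) {
  return { min: range.min + k, max: range.max + k };
}

const INT32_MAX = 0x7fffffff;
const indexRange = { min: 0, max: INT32_MAX };

// The analysis concludes that index + 1 is always >= 1...
const believed = naiveRangeAfterAdd(indexRange, 1);
const lowerCheckEliminated = believed.min >= 0;   // "the index can never be negative"

// ...but at runtime the largest input wraps around to a negative number.
const actualIndex = int32Add(INT32_MAX, 1);

console.log(lowerCheckEliminated);   // true
console.log(actualIndex);            // -2147483648
```

The compiler's belief and the machine's behavior have diverged, and the check that would have caught the difference is gone.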

Why JIT bugs? Why not focus on the interpreter instead?

In an earlier section, I mentioned that vulnerabilities can occur in the baseline interpreter, not only in the JIT compiler. In fact, users can opt to disable the JIT compiler (though by default it’s enabled), but disabling the baseline interpreter is a lot less practical, because that means you’re turning off JavaScript support entirely. You can do this, of course, but much of the modern web will become basically unusable without JavaScript.

With that in mind, wouldn’t the interpreter be an even better target than the JIT compiler, since the JIT compiler is optional and the baseline interpreter is (practically speaking) really not? Why focus on the JIT compiler?

The baseline interpreter is definitely an important target as well, but JIT compilers are responsible for a lot of the vulnerabilities in browsers. The following Microsoft blog post from 2021 asserts that “data after 2019 shows that roughly 45% of CVEs issued for V8 were related to the JIT engine”:

https://microsoftedge.github.io/edgevr/posts/Super-Duper-Secure-Mode/

That blog post also links to the following analysis from Mozilla, which highlights how frequently JIT bugs are exploited: https://docs.google.com/spreadsheets/d/1FslzTx4b7sKZK4BR-DpO45JZNB1QZF9wuijK3OxBwr0/edit?gid=0#gid=0

That data is admittedly a little outdated now, but as far as I’m aware, JIT bugs have remained the primary arena for browser exploitation. There are a couple of contributing factors to this. First, JIT compilers are complex! Complex subsystems often tend to be vulnerable subsystems.

The second and more interesting point to discuss is that JIT bugs aren’t really traditional memory corruption bugs. They’re more logic bugs that happen to lead to memory corruption as a side effect. Recall that JIT bugs often involve things like the compiler deciding that a bounds check or type check isn’t necessary and opting to remove it. Even if this attack surface were rewritten in a memory-safe language, these logic bugs could still exist. If you’re interested in learning a bit more about why JIT compiler bugs are so hard to solve, this presentation (also from saelo) is a great watch: OffensiveCon24 – Samuel Groß – The V8 Heap Sandbox

Finally, JIT bugs also seem to frequently lead to powerful exploit primitives that can be “easily” (compared to some types of vulnerabilities, anyway) converted to the primitives needed to achieve a full exploit.

For example, we’ve mentioned that two common JIT bugs are bugs that lead to access outside intended bounds and bugs that lead to type confusions. An out-of-bounds (OOB) write may allow corrupting a heap object adjacent to the vulnerable one. JS affords an attacker a lot of control over what objects get allocated, so it’s likely you could choose a promising victim object to place next to the vulnerable one and then use the OOB write to corrupt something valuable in the victim object (like a length value, for example, in order to achieve a much less constrained OOB read/write primitive). Type confusions can often be massaged into eventually being OOB reads/writes.

Compare this with something like a linear overflow from a heap object (whose type you don’t get to choose) in some specific heap bin where you’re limited to a small number of other victim object types. A bug like this may certainly still be exploitable, but this could take a lot more work to exploit and might even need to be chained with another bug.
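The adjacent-object corruption described above can be modeled with a typed array standing in for the heap. This is a sketch under heavy assumptions: the layout (a length field followed by elements) is made up for illustration and doesn't match real V8 object layouts, and `uncheckedWrite` and `checkedRead` are hypothetical helpers.

```javascript
// Toy "heap": two length-prefixed arrays laid out back to back in one buffer.
// This models the idea only; real V8 objects have a different layout.
const heap = new Uint32Array(16);

const VULN_BASE = 0;      // [length, e0, e1, e2, e3]
const VICTIM_BASE = 5;    // [length, e0, e1, e2, e3] placed right after it

heap[VULN_BASE] = 4;
heap[VICTIM_BASE] = 4;

// Element write whose bounds check has been (wrongly) removed.
function uncheckedWrite(base, index, value) {
  heap[base + 1 + index] = value;
}

// Element read that still trusts the stored length.
function checkedRead(base, index) {
  if (index >= heap[base]) throw new RangeError("out of bounds");
  return heap[base + 1 + index];
}

// Index 4 is one past the end of the vulnerable array: it lands on the victim's length.
uncheckedWrite(VULN_BASE, 4, 0xffff);

console.log(heap[VICTIM_BASE]);            // 65535
console.log(checkedRead(VICTIM_BASE, 9));  // 0: well past the victim's 4 slots, but no exception
```

A single out-of-bounds write of one element has turned the victim into an array that "legitimately" reads far beyond its own storage.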

JIT bugs: a case study

At this point you may be thinking, “We’ve been talking about JIT compilers for so, so long. Can we please see an example bug so this can make sense and this super long blog post can finally be over?” Let’s take a look at a very simple CTF TurboFan vulnerability. We will not be developing a full exploit for it; however, we’ll be stepping through it in enough detail to at least understand how to trigger the bug and why it occurs.

We’re going to take a look at an old PicoCTF challenge called Turboflan. The files for it are available here: https://play.picoctf.org/practice/challenge/178?page=1&search=turboflan

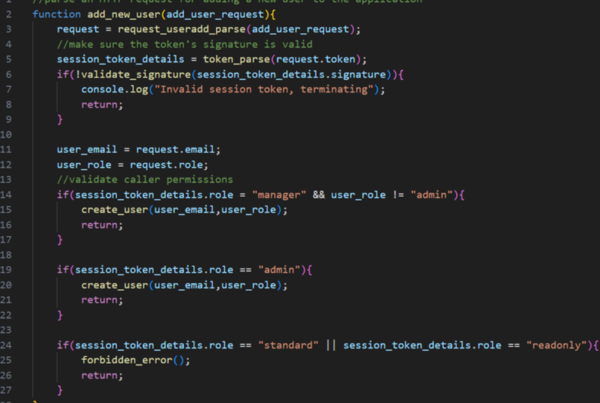

We’re given a build of D8 and a patch file, among other things. Patch analysis is a good starting point. The relevant portion of the patch is pretty small and even has a helpful comment to draw our attention to it (note that this challenge is from 2021, and the file being patched doesn’t seem to be present in the same place in 2025):

    --- a/src/compiler/effect-control-linearizer.cc
    +++ b/src/compiler/effect-control-linearizer.cc
    @@ -1866,8 +1866,9 @@ void EffectControlLinearizer::LowerCheckMaps(Node* node, Node* frame_state) {
           Node* map = __ HeapConstant(maps[i]);
           Node* check = __ TaggedEqual(value_map, map);
           if (i == map_count - 1) {
    -        __ DeoptimizeIfNot(DeoptimizeReason::kWrongMap, p.feedback(), check,
    -                           frame_state, IsSafetyCheck::kCriticalSafetyCheck);
    +        // This makes me slow down! Can't have! Gotta go fast!!
    +        // __ DeoptimizeIfNot(DeoptimizeReason::kWrongMap, p.feedback(), check,
    +        //                     frame_state, IsSafetyCheck::kCriticalSafetyCheck);
           } else {
             auto next_map = __ MakeLabel();
             __ BranchWithCriticalSafetyCheck(check, &done, &next_map);
    @@ -1888,8 +1889,8 @@ void EffectControlLinearizer::LowerCheckMaps(Node* node, Node* frame_state) {
           Node* check = __ TaggedEqual(value_map, map);
     
           if (i == map_count - 1) {
    -        __ DeoptimizeIfNot(DeoptimizeReason::kWrongMap, p.feedback(), check,
    -                           frame_state, IsSafetyCheck::kCriticalSafetyCheck);
    +        // __ DeoptimizeIfNot(DeoptimizeReason::kWrongMap, p.feedback(), check,
    +        //                    frame_state, IsSafetyCheck::kCriticalSafetyCheck);
           } else {
             auto next_map = __ MakeLabel();
             __ BranchWithCriticalSafetyCheck(check, &done, &next_map);

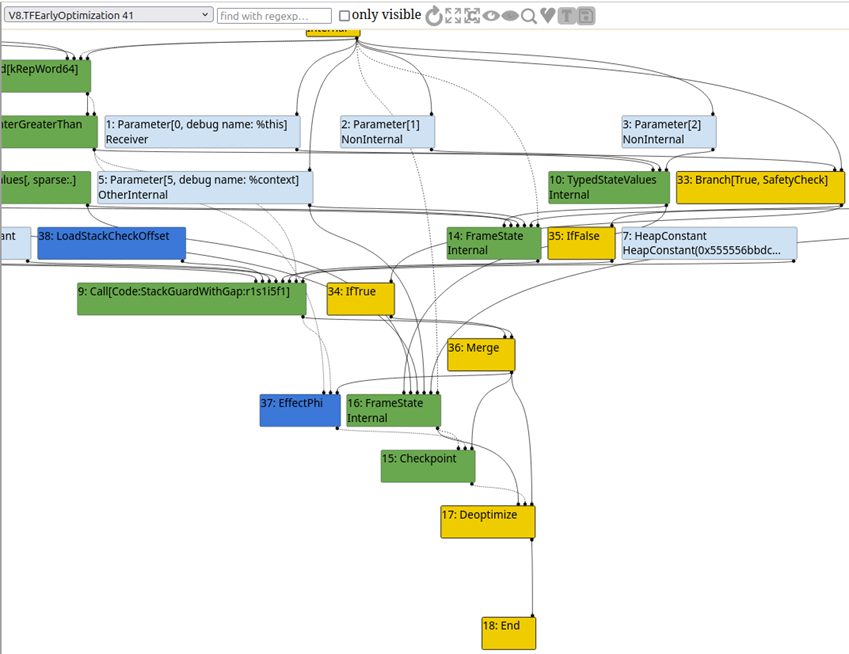

So the patch comments out the two DeoptimizeIfNot calls that mention “DeoptimizeReason::kWrongMap”. Remember how we examined a speculation guard earlier that would check an object’s map and ensure it wasn’t different than expected? Well, now the bailout for that check is gone. A good starting point to understand what this looks like would be to write some code that should involve a map check, run it on a 2025 build of D8, and take a look at the JIT process in Turbolizer, then try the same code on this patched, intentionally vulnerable version of D8 and compare the JIT output.

We’ve actually already done the first half earlier in this blog post. As a reminder, here’s the code we used:

    d8> test_object = {x:1}
    {x: 1}
    d8> function object_jit(arg1,arg2){return arg1.x + arg2}
    undefined

After calling object_jit() enough times for TurboFan to compile it, we can examine the emitted JSON file in Turbolizer. Observe that Parameter[1], which is our test_object argument, eventually receives a CheckMaps check to ensure it’s still got the expected map (which in other words means we’re checking to ensure the object still has the expected type):

Great, so we know how this should look in a modern build. Let’s try running the CTF build of D8 with the --allow-natives-syntax and --trace-turbo arguments, writing the same code, and getting a JSON file emitted.

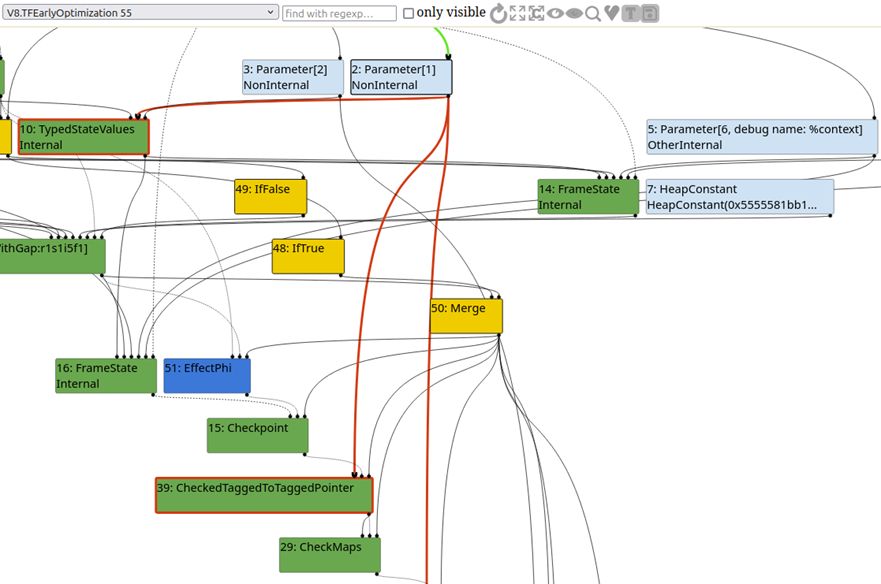

Upon loading up that JSON file in Turbolizer, we can observe that a CheckMaps node is not present:

At this point, we can see that we’ve basically been handed a very simple type confusion vulnerability. In the real world, we’d likely need to identify improper side effect modeling and come up with some clever input to trick the compiler into believing a map check is unnecessary. In this case, that work has basically been done for us. This is a lot less complex than a real-world bug would likely be, but it’s a good choice for an introductory blog post like this.

Let’s make sure we understand the strategy here to trigger the bug. This patch is ensuring that the map-checking speculation guard is removed. To abuse this, we’ll JIT the function with one type of argument, but then pull a switcheroo by providing a different type of argument after the function has been JITed. The map of our new object won’t be checked, so the optimized code will never trigger a deoptimization bailout; in turn, the lack of bailout will lead to a type confusion.

Our next step is to come up with some object types that would be useful in a type confusion. Which type are we going to make the JITed function expect, and then which type are we going to swap in once we’ve got some optimized code? There are probably quite a few answers that would work, but in this case, we’ll take the approach of making the JITed function expect a 64-bit float array, but then call the JITed function with an array of objects instead. An array of objects is actually an array of pointers in V8 (see https://stackoverflow.com/a/75762917), so a type confusion between a float array and an object array would allow leaking pointers. We’re only striving to get to the point where we can trigger the bug, so we’re not going to cover the specific exploitation details here. If you want more info on this exploitation method, you should check out the following blog post, since the approach in this blog post is heavily based on it: https://www.willsroot.io/2021/04/turboflan-picoctf-2021-writeup-v8.html
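To make the "pointers read as floats" idea a bit more concrete, here's a small sketch of reinterpreting the same 8 bytes as either a 64-bit float or a 64-bit integer. It runs in any modern JS engine and doesn't touch the bug at all. The value in `pretendSlot` is made up and is only standing in for whatever bits an object array slot might hold, and the helper names are my own.

```javascript
// Two typed-array views over the same 8 bytes let us reinterpret raw bits.
const scratch = new ArrayBuffer(8);
const asFloat = new Float64Array(scratch);
const asBits = new BigUint64Array(scratch);

// Interpret a 64-bit integer bit pattern as a double.
function bitsToFloat(bits) {
  asBits[0] = bits;
  return asFloat[0];
}

// Recover the raw 64-bit pattern behind a double.
function floatToBits(f) {
  asFloat[0] = f;
  return asBits[0];
}

// Made-up 64-bit value standing in for the contents of an object array slot.
const pretendSlot = 0x0000123400005678n;

// What a read that wrongly assumes "float array" would hand back.
const leaked = bitsToFloat(pretendSlot);
console.log(leaked);   // a meaningless-looking, extremely small float

// Converting back reveals the original bits.
console.log("0x" + floatToBits(leaked).toString(16));   // 0x123400005678
```

This is why a strange-looking float coming out of a confused read is interesting rather than just garbage: with a conversion helper like this, it can be turned back into the raw value that was stored in memory.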

Here’s some code that will trigger the bug:

    function to_jit(obj){
        // to prevent TurboFan from inlining this function, let's make it seem more complex by adding a loop
        // the functionality here is irrelevant; I based this on the functionality saelo used here: https://gist.github.com/saelo/52985fe415ca576c94fc3f1975dbe837#file-pwn-js-L253
        var prevent_inlining_int = 500;
        for (var i = 0; i < prevent_inlining_int / 2; i++){
            if(prevent_inlining_int % i == 0)
                var z = 5;
        }
        return obj[0];
    }

    test_obj = {"x":1,"y":2,"z":3};
    float_array = [1.1,2.2,3.3];
    obj_array = [test_obj,test_obj,test_obj];

    // now let's make the function hot so that TurboFan compiles it
    console.log("JIT the function");
    for (var i = 0; i < 0x20000; i++){
        to_jit(float_array);
    }

    // now let's compare the output between an expected type (a float array) and an unexpected type (an array of objects)
    console.log(to_jit(float_array)); // should return 1.1
    console.log(obj_array[0]); // should return [object Object]
    console.log(to_jit(obj_array)); // send an object with a different map and trigger the bug

Here’s what happens upon running that code:

    wintermute@wintermute-VirtualBox:~/turboflan$ ./d8 pwn.js
    JIT the function
    1.1
    [object Object]
    5.753374822877835e-270

We’ve triggered the bug successfully! The correct result of to_jit(obj_array) would be [object Object], just like obj_array[0], but instead we’re receiving a float (because the JIT compiler speculated that the function’s argument would always be a float array, and there’s no longer a check that bails out when the map doesn’t match). From here, we could focus on determining what content we’re leaking and converting this type confusion to read/write primitives. However, this post is only focused on providing an introduction to JIT compiler bugs and isn’t intended to be an intro to more general V8 exploitation, so we’ll conclude our examination of this bug here. Readers interested in the full exploitation process for this challenge should check out the following blog posts:

https://www.willsroot.io/2021/04/turboflan-picoctf-2021-writeup-v8.html

https://blog.joshdabo.sh/2021/04/18/picoctf-turboflan/

Conclusion

This blog post provided an introduction to JIT compilers in JavaScript engines, including the motivation for using them and high-level details on how they work. We examined how to perform basic debugging of JITed code and took a look at the tool Turbolizer, which is helpful for visualizing the various optimization phases performed by TurboFan, a JIT compiler in V8.

We discussed common vulnerabilities introduced by JIT compilers and examined how to trigger an extremely simple JIT vulnerability from a CTF challenge. We also learned that JIT vulnerabilities are generally considered logic bugs that often lead to memory corruption as a side effect, and that JIT bugs frequently offer powerful exploit primitives that can lead to full exploitation. Hopefully, this post helped make this area of security seem a little bit less overwhelming and inspired you to dig into it further.